3. Risk#

3.1. Introduction to the Risk Module#

The seismic risk results are calculated using the OpenQuake risk library, an open-source suite of tools for seismic risk assessment and loss estimation. This library is written in the Python programming language and available in the form of a “developers” release at the following location: gem/oq-engine.

The risk component of the OpenQuake engine can compute both scenario-based and probabilistic seismic damage and risk using various approaches. The following types of analysis are currently supported:

Scenario Damage Assessment, for the calculation of damage distribution statistics for a portfolio of buildings from a single earthquake rupture scenario taking into account aleatory and epistemic ground-motion variability.

Scenario Risk Assessment, for the calculation of individual asset and portfolio loss statistics due to a single earthquake rupture scenario taking into account aleatory and epistemic ground-motion variability. Correlation in the vulnerability of different assets of the same typology can also be taken into consideration.

Classical Probabilistic Seismic Damage Analysis, for the calculation of damage state probabilities over a specified time period, and probabilistic collapse maps, starting from the hazard curves computed following the classical integration procedure ((Cornell 1968), McGuire (1976)) as formulated by (Field, Jordan, and Cornell 2003).

Classical Probabilistic Seismic Risk Analysis, for the calculation of loss curves and loss maps, starting from the hazard curves computed following the classical integration procedure ((Cornell 1968), McGuire (1976)) as formulated by (Field, Jordan, and Cornell 2003).

Stochastic Event Based Probabilistic Seismic Damage Analysis, for the calculation of event damage tables starting from stochastic event sets. Other results such as damage-state-exceedance curves, probabilistic damage maps, and average annual damages or collapses can be obtained by post-processing the event damage tables.

Stochastic Event Based Probabilistic Seismic Risk Analysis, for the calculation of event loss tables starting from stochastic event sets. Other results such as loss-exceedance curves, probabilistic loss maps, and average annual losses can be obtained by post-processing the event loss tables.

Retrofit Benefit-Cost Ratio Analysis, which is useful in estimating the net-present value of the potential benefits of performing retrofitting for a portfolio of assets (in terms of decreased losses in seismic events), measured relative to the upfront cost of retrofitting.

Each calculation workflow has a modular structure, so that intermediate results can be saved and analyzed. Moreover, each calculator can be extended independently of the others so that additional calculation options and methodologies can be easily introduced, without affecting the overall calculation workflow. Each workflow is described in more detail in the following sections.

3.1.1. Scenario Damage Assessment#

The scenario damage calculator computes damage distribution statistics for all assets in a given Exposure Model for a single specified rupture. Damage distribution statistics include the mean and standard deviation of damage fractions for different damage states. This calculator requires the definition of a finite Rupture Model, an Exposure Model and a Fragility Model; the main results are the damage distribution statistics per asset, aggregated damage distribution statistics per taxonomy, aggregated damage distribution statistics for the region, and collapse maps, which contain the spatial distribution of the number or area of collapsed buildings throughout the region of interest.

The rupture characteristics—i.e. the magnitude, hypocenter and fault geometry—are modelled as deterministic in the scenario calculators. Multiple simulations of different possible Ground Motion Fields due to the single rupture are generated, taking into consideration both the inter-event variability of ground motions, and the intra-event residuals obtained from a spatial correlation model for ground motion residuals. The use of logic trees allows for the consideration of uncertainty in the choice of a ground motion model for the given tectonic region.

As an alternative to computing the Ground Motion Fields with OpenQuake engine, users can also provide their own sets of Ground Motion Fields as input to the scenario damage calculator.

Note: The damage simulation algorithm for the scenario damage calculator has changed starting from OpenQuake engine39 to use a full Monte Carlo simulation of damage states.

For each Ground Motion Field, a damage state is simulated for each building for every asset in the Exposure Model using the provided Fragility Model, and finally the mean damage distribution across all realizations is calculated. The calculator also provides aggregated damage distribution statistics for the portfolio, such as mean damage fractions for each taxonomy in the Exposure Model, and the mean damage for the entire region of study.

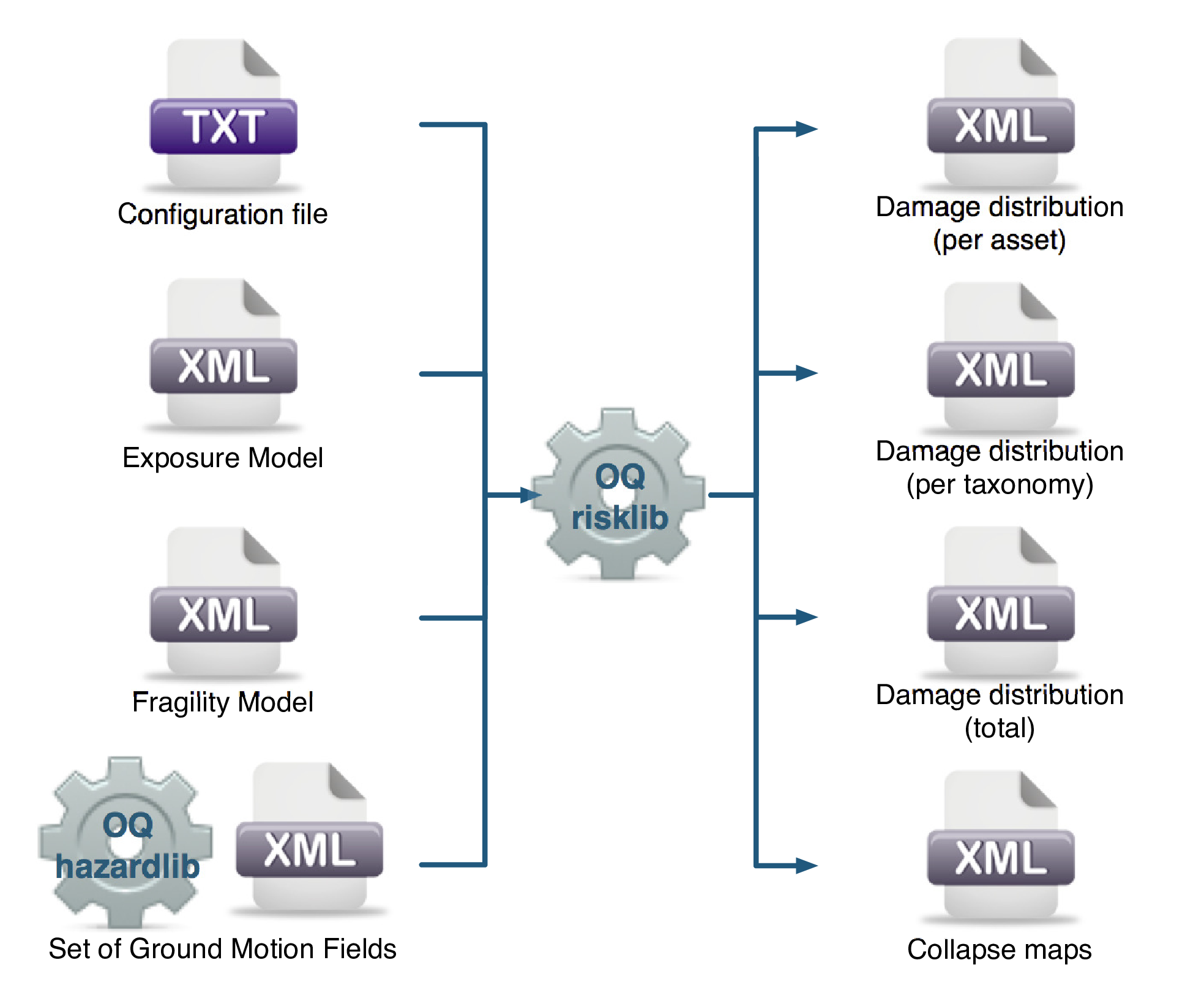

The required input files required for running a scenario damage calculation and the resulting output files are depicted in Fig. 3.1.

Fig. 3.1 Scenario Damage Calculator input/output structure.#

Consequence Model files can also be provided as inputs for a scenario damage calculation in addition to fragilitymodels files, in order to estimate consequences based on the calculated damage distribution. The user may provide one Consequence Model file corresponding to each loss type (amongst structural, nonstructural, contents, and business interruption) for which a Fragility Model file is provided. Whereas providing a Fragility Model file for at least one loss type is mandatory for running a Scenario Damage calculation, providing corresponding Consequence Model files is optional.

3.1.2. Scenario Risk Assessment#

The scenario risk calculator computes loss statistics for all assets in a given Exposure Model for a single specified rupture. Loss statistics include the mean and standard deviation of ground-up losses for each loss type considered in the analysis. Loss statistics can currently be computed for five different loss types using this calculator: structural losses, nonstructural losses, contents losses, downtime losses, and occupant fatalities. This calculator requires the definition of a finite Rupture Model, an Exposure Model and a Vulnerability Model for each loss type considered; the main results are the loss statistics per asset and mean loss maps.

The rupture characteristics—i.e. the magnitude, hypocenter and fault geometry—are modelled as deterministic in the scenario calculators. Multiple simulations of different possible Ground Motion Fields due to the single rupture are generated, taking into consideration both the inter-event variability of ground motions, and the intra-event residuals obtained from a spatial correlation model for ground motion residuals. The use of logic trees allows for the consideration of uncertainty in the choice of a ground motion model for the given tectonic region.

As an alternative to computing the Ground Motion Fields with OpenQuake, users can also provide their own sets of Ground Motion Fields as input to the scenario risk calculator.

For each Ground Motion Field simulation, a loss ratio is sampled for every asset in the Exposure Model using the provided probabilistic Vulnerability Model taking into consideration the correlation model for vulnerability of different assets of a given taxonomy. Finally loss statistics, i.e., the mean loss and standard deviation of loss for ground-up losses across all simulations, are calculated for each asset. Mean loss maps are also generated by this calculator, describing the mean ground-up losses caused by the scenario event for the different assets in the Exposure Model.

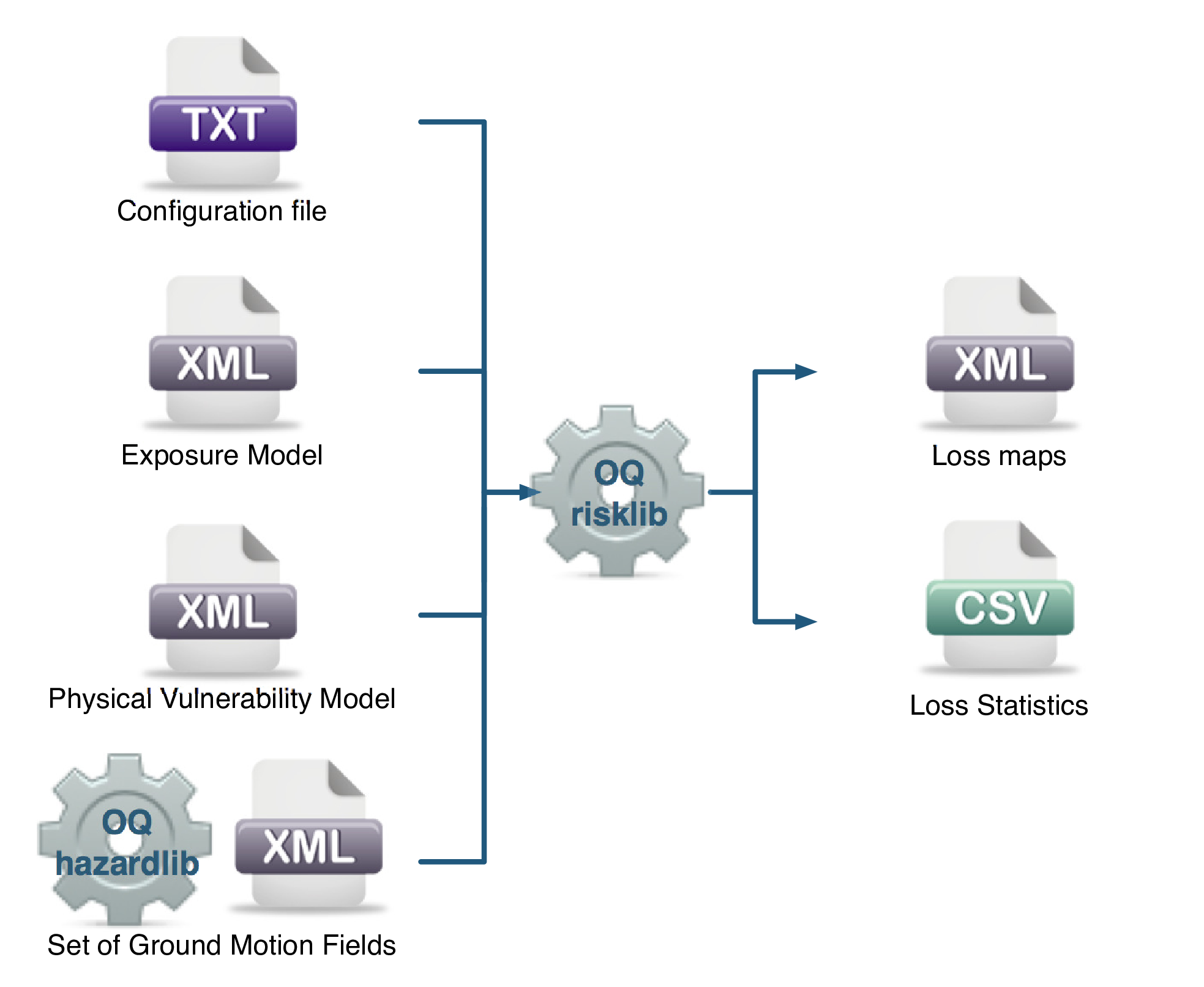

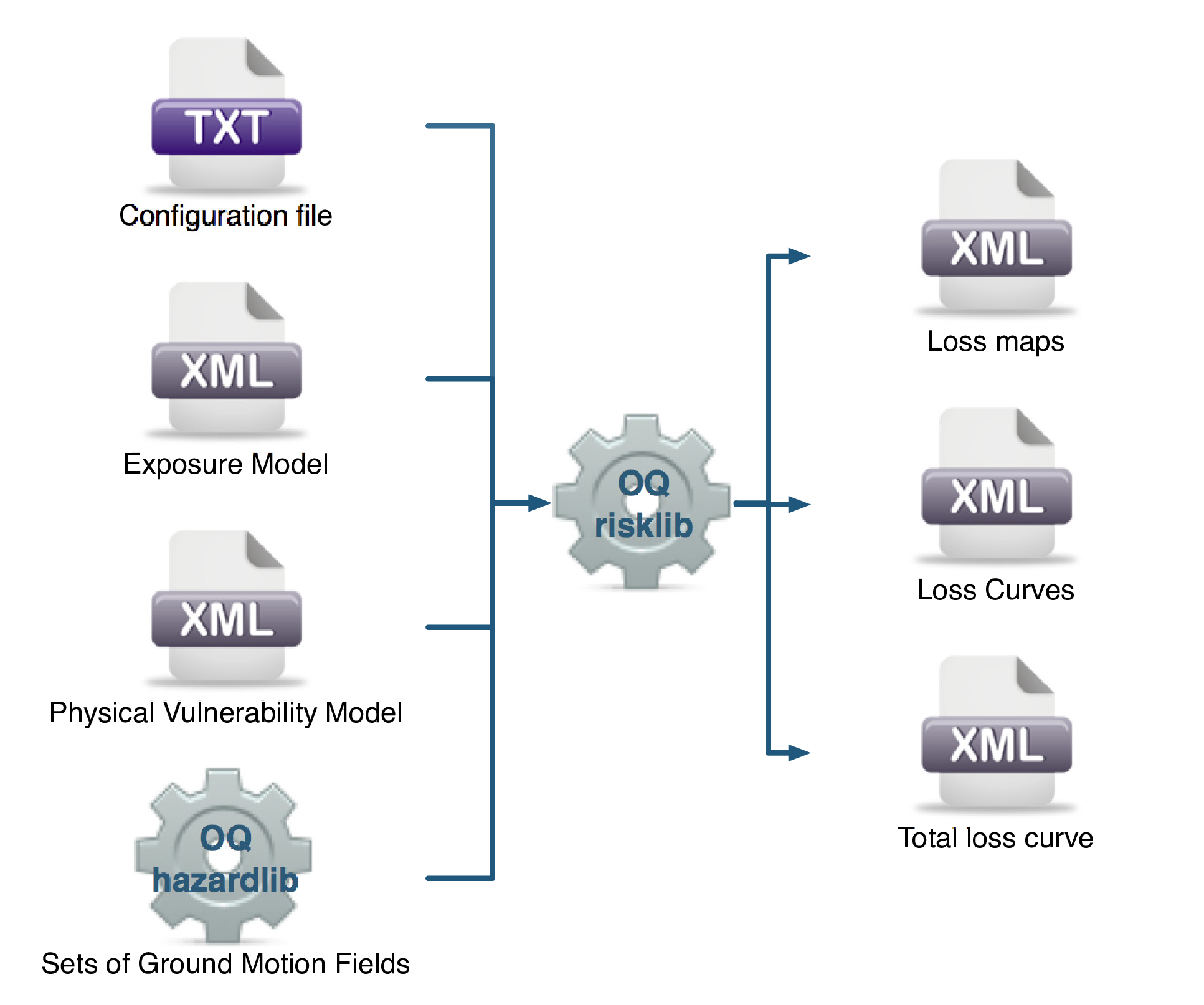

The required input files required for running a scenario risk calculation and the resulting output files are depicted in Fig. 3.2.

Fig. 3.2 Scenario Risk Calculator input/output structure.#

3.1.3. Classical Probabilistic Seismic Damage Analysis#

The classical PSHA-based damage calculator integrates the fragility functions for an asset with the seismic hazard curve at the location of the asset, to give the expected damage distribution for the asset within a specified time period. The calculator requires the definition of an Exposure Model, a Fragility Model with fragilityfunctions for each taxonomy represented in the Exposure Model, and hazard curves calculated in the region of interest. The main results of this calculator are the expected damage distribution for each asset, which describe the probability of the asset being in different damage states, and collapse maps for the region, which describe the probability of collapse for different assets in the portfolio over the specified time period. Damage distribution aggregated by taxonomy or of the total portfolio (considering all assets in the Exposure Model) can not be extracted using this calculator, as the spatial correlation of the ground motion residuals is not taken into consideration.

The hazard curves required for this calculator can be calculated by the OpenQuake engine for all asset locations in the Exposure Model using the classical PSHA approach (Cornell 1968; McGuire 1976).

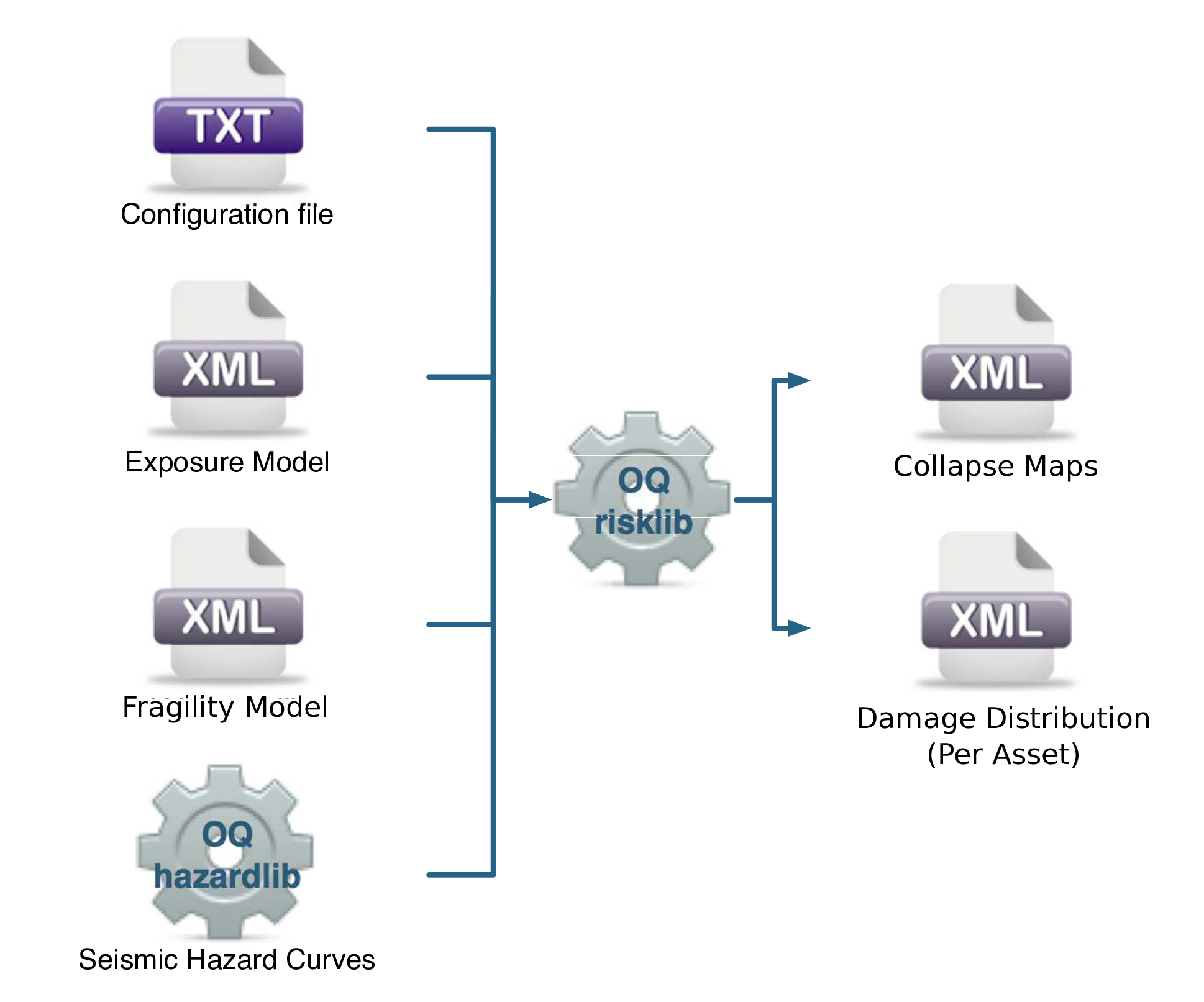

The required input files required for running a classical probabilistic damage calculation and the resulting output files are depicted in Fig. 3.3.

Fig. 3.3 Classical PSHA-based Damage Calculator input/output structure.#

3.1.4. Classical Probabilistic Seismic Risk Analysis#

The classical PSHA-based risk calculator convolves through numerical integration, the probabilistic vulnerability functions for an asset with the seismic hazard curve at the location of the asset, to give the loss distribution for the asset within a specified time period. The calculator requires the definition of an Exposure Model, a Vulnerability Model for each loss type of interest with vulnerabilityfunctions for each taxonomy represented in the Exposure Model, and hazard curves calculated in the region of interest. Loss curves and loss maps can currently be calculated for five different loss types using this calculator: structural losses, nonstructural losses, contents losses, downtime losses, and occupant fatalities. The main results of this calculator are loss exceedance curves for each asset, which describe the probability of exceedance of different loss levels over the specified time period, and loss maps for the region, which describe the loss values that have a given probability of exceedance over the specified time

Unlike the probabilistic event-based risk calculator, an aggregate loss curve (considering all assets in the Exposure Model) can not be extracted using this calculator, as the correlation of the ground motion residuals and vulnerability uncertainty is not taken into consideration in this calculator.

The hazard curves required for this calculator can be calculated by the OpenQuake engine for all asset locations in the Exposure Model using the classical PSHA approach (Cornell 1968; McGuire 1976). The use of logic- trees allows for the consideration of model uncertainty in the choice of a ground motion prediction equation for the different tectonic region types in the region. Unlike what was described in the previous calculator, a total loss curve (considering all assets in the Exposure Model) can not be extracted using this calculator, as the correlation of the ground motion residuals and vulnerability uncertainty is not taken into consideration.

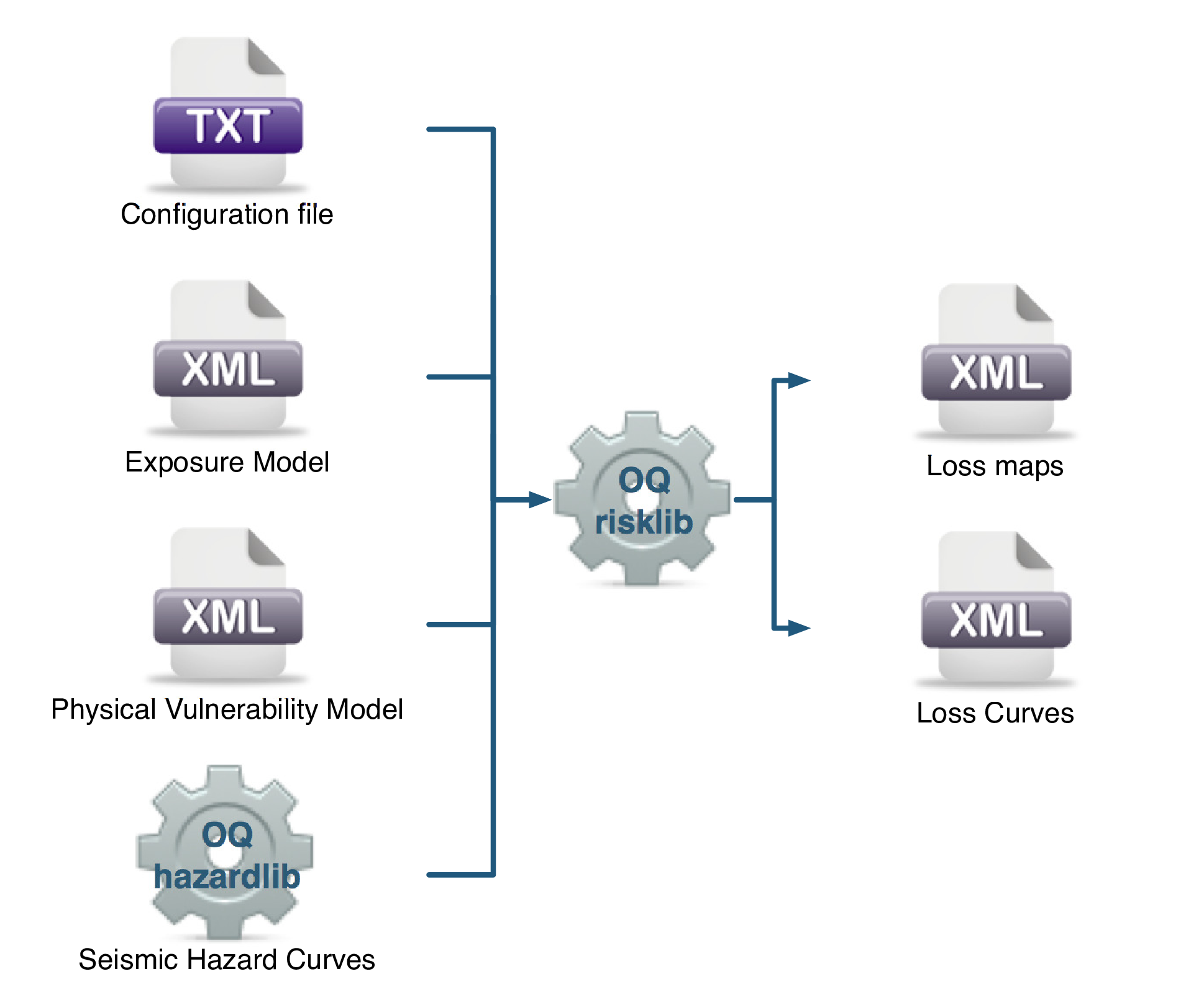

The required input files required for running a classical probabilistic risk calculation and the resulting output files are depicted in Fig. 3.4.

Fig. 3.4 Classical PSHA-based Risk Calculator input/output structure.#

3.1.5. Stochastic Event Based Probabilistic Seismic Damage Analysis#

This calculator employs an event-based Monte Carlo simulation approach to probabilistic damage assessment in order to estimate the damage distribution for individual assets and aggregated damage distribution for a spatially distributed portfolio of assets within a specified time period. The calculator requires the definition of an Exposure Model, a Fragility Model for each loss type of interest with fragilityfunctions for each damage state for every typology represented in the Exposure Model, and a Stochastic Event Set representative of the seismicity of the region over the specified time period. Damage state curves and damage maps corresponding to specified return periods can also be obtained using this calculator.

As an alternative to computing the Ground Motion Fields with OpenQuake engine, users can also provide their own sets of Ground Motion Fields as input to the event-based damage calculator.

The main results of this calculator are the event damage tables; these tables describe the total number of buildings in each damage state for the portfolio of assets for each seismic event in the Stochastic Event Set.

Asset-level event damage tables are generated by the calculator, but are not exportable in csv format due to the large file sizes that may be involved. Interested users can access the asset-level event damage tables within the datastore for the completed calculation.

This calculator relies on the probabilistic event-based hazard calculator, which simulates the seismicity of the chosen time period \(T\) by producing a Stochastic Event Set. For each rupture generated by a Seismic Source, the number of occurrences in the given time span \(T\) is simulated by sampling the corresponding probability distribution as given by \(P_{rup}(k | T)\). A Stochastic Event Set is therefore a sample of the full population of ruptures as defined by a Seismic Source Model. Each rupture is present zero, one or more times, depending on its probability. Symbolically, we can define a Stochastic Event Set as:

where \(k\), the number of occurrences, is a random sample of \(P_{rup}(k | T)\), and \(k \times rup\) means that rupture \(rup\) is repeated \(k\) times in the Stochastic Event Set.

For each rupture or event in the Stochastic Event Sets, a spatially correlated Ground Motion Field realisation is generated, taking into consideration both the inter-event variability of ground motions, and the intra-event residuals obtained from a spatial correlation model for ground motion residuals (if one is specified in the job file). The use of logic trees allows for the consideration of uncertainty in the choice of a Seismic Source Model, and in the choice of groundmotionmodels for the different tectonic regions.

For each Ground Motion Field realization, a damage state is siumulated for each building of every asset in the Exposure Model using the provided Fragility Model. The asset-level event damage table is saved to the datastore. Time-averaged damage distributions at the asset-level can be obtained from the event damage table. Finally damage state exceedance curves can be computed.

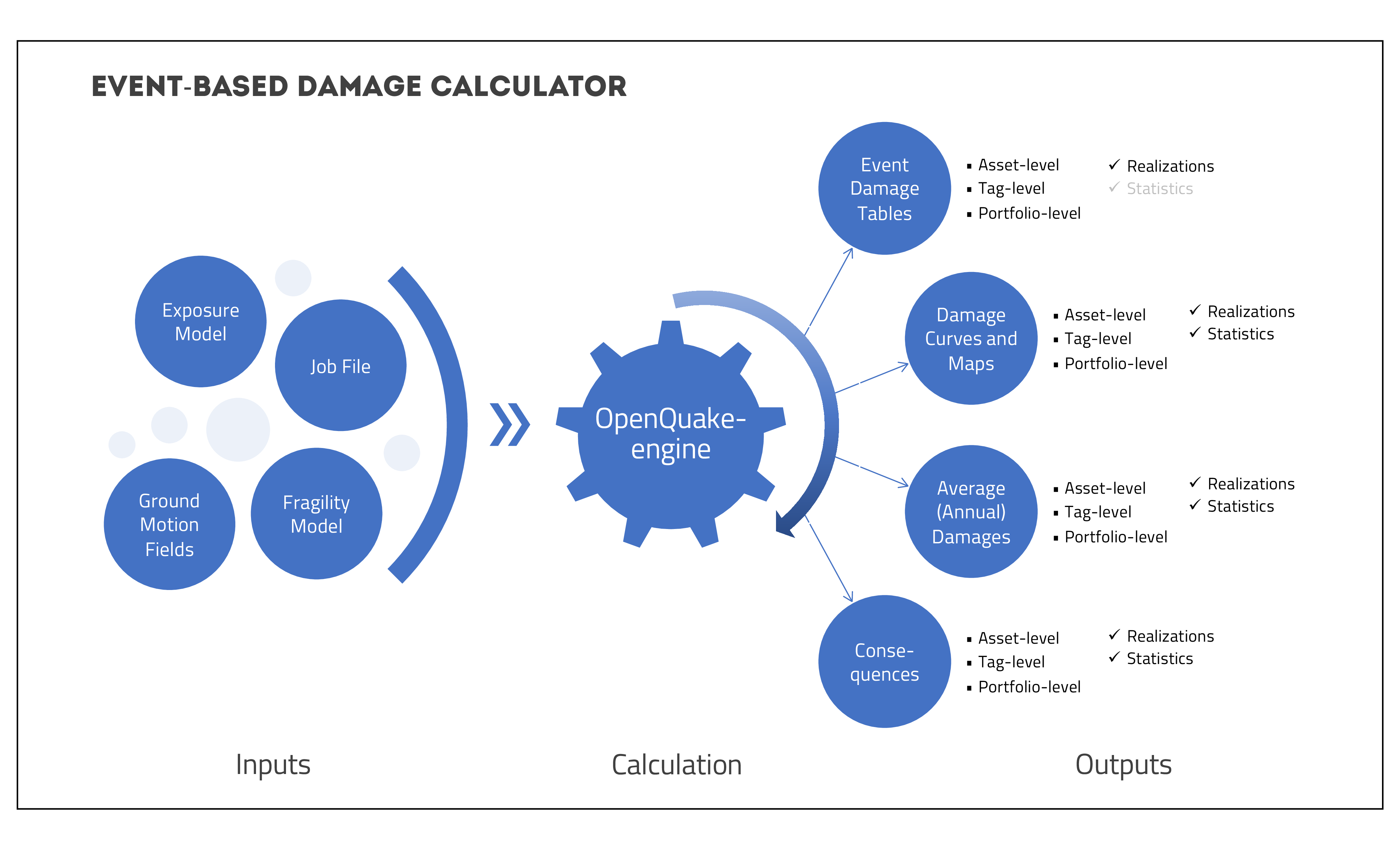

The required input files required for running a probabilistic stochastic event-based damage calculation and the resulting output files are depicted in Fig. 3.5.

Fig. 3.5 Probabilistic Event-based Damage Calculator input/output structure.#

Similar to the scenario damage calculator, Consequence Model files can also be provided as inputs for an event-based damage calculation in addition to fragilitymodels files, in order to estimate consequences based on the calculated damage distribution. The user may provide one Consequence Model file corresponding to each loss type (amongst structural, nonstructural, contents, and business interruption) for which a Fragility Model file is provided. Whereas providing a Fragility Model file for at least one loss type is mandatory for running an Event-Based Damage calculation, providing corresponding Consequence Model files is optional.

3.1.6. Stochastic Event Based Probabilistic Seismic Risk Analysis#

This calculator employs an event-based Monte Carlo simulation approach to probabilistic risk assessment in order to estimate the loss distribution for individual assets and aggregated loss distribution for a spatially distributed portfolio of assets within a specified time period. The calculator requires the definition of an Exposure Model, a Vulnerability Model for each loss type of interest with vulnerabilityfunctions for each taxonomy represented in the Exposure Model, and a Stochastic Event Set (also known as a synthetic catalog) representative of the seismicity of the region over the specified time period. Loss curves and loss maps can currently be calculated for five different loss types using this calculator: structural losses, nonstructural losses, contents losses, downtime losses, and occupant fatalities.

As an alternative to computing the Ground Motion Fields with OpenQuake engine, users can also provide their own sets of Ground Motion Fields as input to the event-based risk calculator, starting from OpenQuake engine28.

The main results of this calculator are loss exceedance curves for each asset, which describe the probability of exceedance of different loss levels over the specified time period, and loss maps for the region, which describe the loss values that have a given probability of exceedance over the specified time period. Aggregate loss exceedance curves can also be produced using this calculator; these describe the probability of exceedance of different loss levels for all assets in the portfolio. Finally, event loss tables can be produced using this calculator; these tables describe the total loss across the portfolio for each seismic event in the Stochastic Event Set.

This calculator relies on the probabilistic event-based hazard calculator, which simulates the seismicity of the chosen time period \(T\) by producing a Stochastic Event Set. For each rupture generated by a Seismic Source, the number of occurrences in the given time span \(T\) is simulated by sampling the corresponding probability distribution as given by \(P_{rup}(k | T)\). A Stochastic Event Set is therefore a sample of the full population of ruptures as defined by a Seismic Source Model. Each rupture is present zero, one or more times, depending on its probability. Symbolically, we can define a Stochastic Event Set as:

where \(k\), the number of occurrences, is a random sample of \(P_{rup}(k | T)\), and \(k \times rup\) means that rupture \(rup\) is repeated \(k\) times in the Stochastic Event Set.

For each rupture or event in the Stochastic Event Sets, a spatially correlated Ground Motion Field realisation is generated, taking into consideration both the inter-event variability of ground motions, and the intra-event residuals obtained from a spatial correlation model for ground motion residuals (if one is specified in the job file). The use of logic trees allows for the consideration of uncertainty in the choice of a Seismic Source Model, and in the choice of groundmotionmodels for the different tectonic regions.

For each Ground Motion Field realization, a loss ratio is sampled for every asset in the Exposure Model using the provided probabilistic Vulnerability Model, taking into consideration the correlation model for vulnerability of different assets of a given taxonomy. Finally loss exceedance curves are computed for ground-up losses.

The required input files required for running a probabilistic stochastic event-based risk calculation and the resulting output files are depicted in Fig. 3.6.

Fig. 3.6 Probabilistic Event-based Risk Calculator input/output structure.#

3.1.7. Retrofit Benefit-Cost Ratio Analysis#

This calculator represents a decision-support tool for deciding whether the employment of retrofitting measures to a collection of existing buildings is advantageous from an economical point of view. For this assessment, the expected losses considering the original and retrofitted configuration of the buildings are estimated, and the economic benefit due to the better seismic design is divided by the retrofitting cost, leading to the benefit/cost ratio. These loss curves are computed using the previously described Classical PSHA- based Risk calculator. The output of this calculator is a benefit/cost ratio for each asset, in which a ratio above one indicates that employing a retrofitting intervention is economically viable.

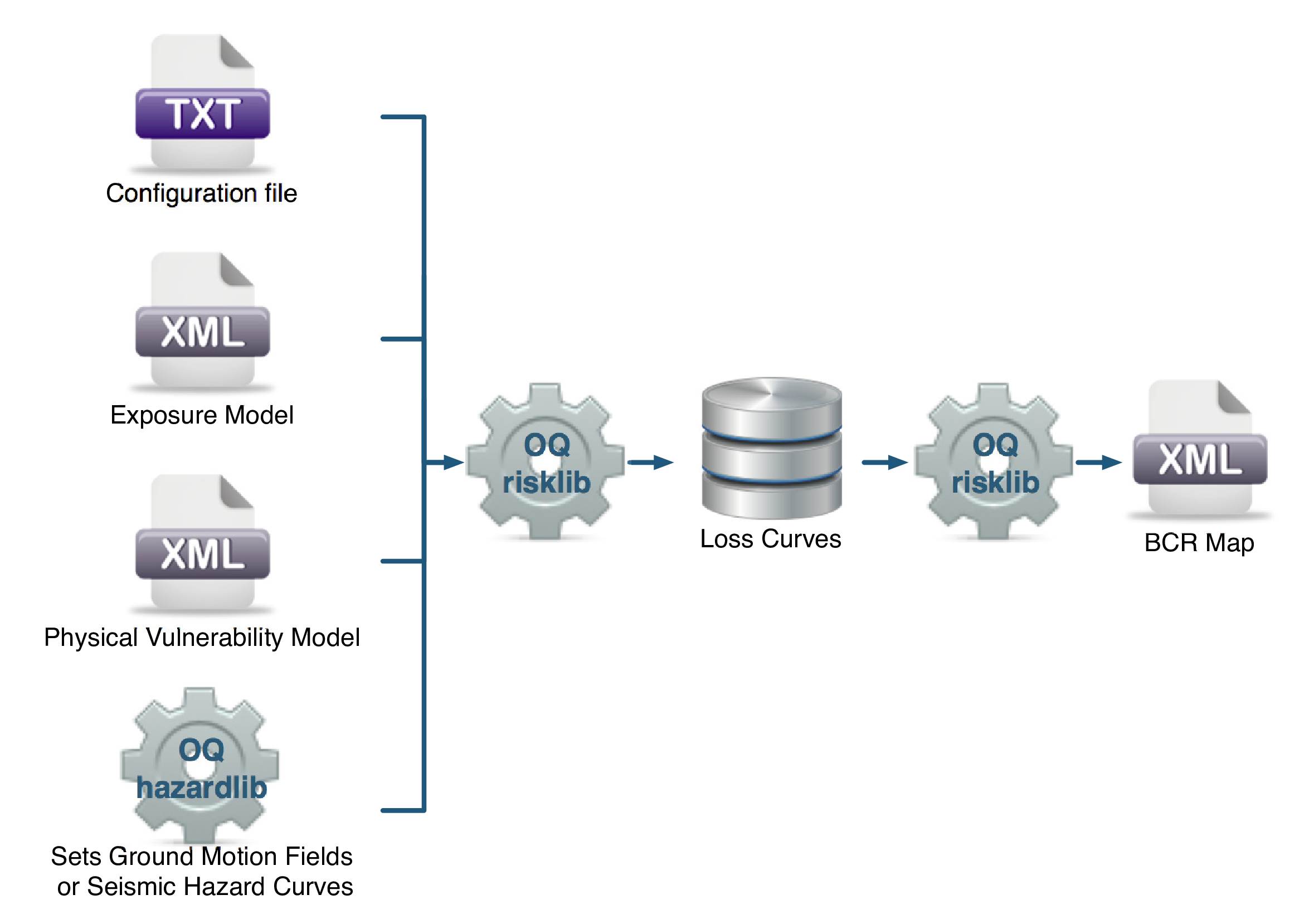

In Fig. 3.7, the input/output structure for this calculator is depicted.

Fig. 3.7 Retrofitting Benefit/Cost Ratio Calculator input/output structure.#

For further information regarding the theoretical background of the methodologies used for each calculator, users are referred to the OpenQuake- engine Book (Risk).

3.1.8. Infrastructure Risk Analysis#

3.1.8.1. Rationale#

Critical infrastructures, whose components are interconnected and behave as a system, are vital for the functioning of the society. Unlike the portfolio of the buildings whose total loss can be calculated by simply aggregating the losses from the individual component unit, it is not straightforward for critical infrastructures as they possess the complex network property to behave synergistically.

Risk assessment of critical infrastructures is limited due to the underlying complexities and lack of integrated tools that can compute from hazard characterization to risk assessment at system level. Therefore, in order to aid the assessment of infrastructures extensively and globally, this implementation has been carried out.

As of now, risk assessment at connenctivity level using topology based network analysis has been carried out. This can be uniformly used for different types of critical infrastructures including water supply systems, electric power networks, transporation systems, natural gas, etc.

3.1.8.2. Specification of the Input Model#

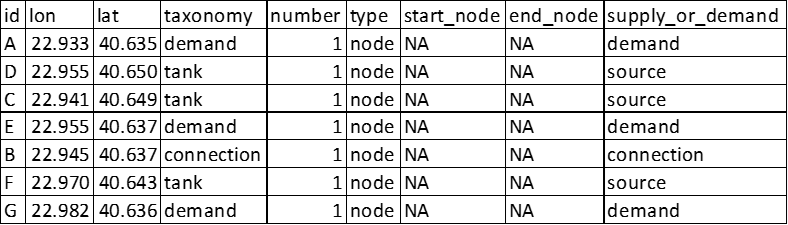

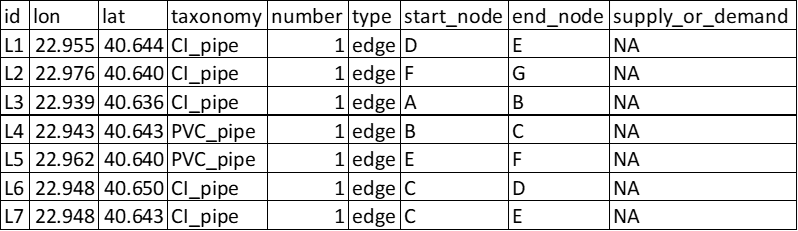

Critical infrastructures are represented as graph-like components consisting of nodes/vertices connected by edges/links. The graph can be simple undirected, directed, multi or multidirected type. To each of these types, the graph can be weighted or unweighted. If not specified, the default is simple unweighted undirected graph. In order to generate the graphs, the adjustment should be made in the exposure.csv file. Additional to the usual information, it should also include the columns type, start_node, end_node, demand_or_supply.

A snippet of exposure model for nodes and edges for a simple unweighted graph is shown in Fig. 3.8 and Fig. 3.9.

Fig. 3.8 Example of the exposure model of nodes of the infrastructure#

Fig. 3.9 Example of the exposure model of edges of the infrastructure#

If the weights are to be added, there should be a column name weights, and weights can be travel time, distance, importance factor, etc., according to the user requirement.

Note: If weight is not present, it assigns 1 as weight to every edge while calculating Weighted Connectivity Loss(WCL) and Efficiency Loss(EL)

If the user wants to specify the graph type, another column graphtype must be added. It can be either “directed”, “multi”, “multidirected” or “simple”.

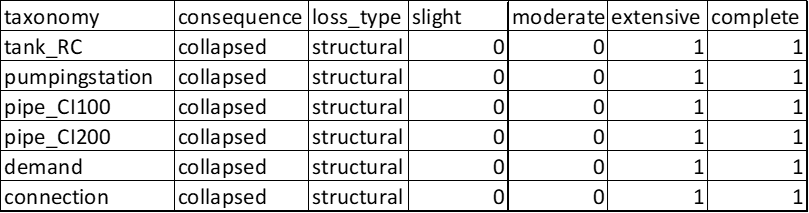

Hazard model and fragility model are similar to other comuptations. In order to do the network analysis, it is important to define if each component is functional/operational or not, which is defined by the damage states. For this, an additional consequence model is necessary (see Fig. 3.10). As of now, only the binary state is considered i.e “functional/operational” or “non-functional/non-operational”.

Fig. 3.10 Example of the consequence model (Note: 0 implies still operational and 1 implies non-operational)#

3.1.8.3. Calculation Steps#

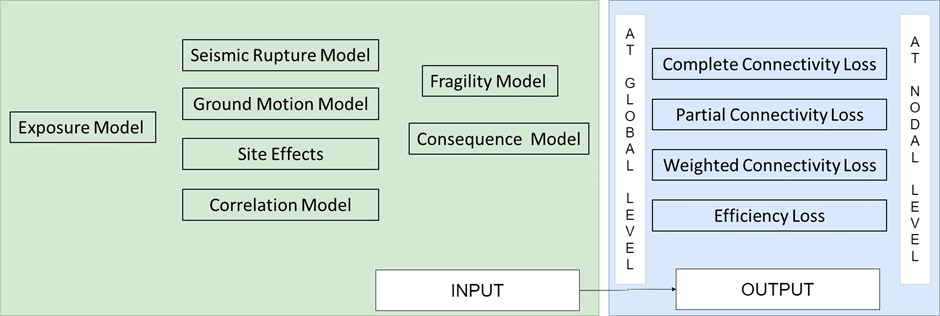

Preparation of input models, which includes:

Exposure model

Hazard model

Fragility model

Consequence model

Computation of the specific damage states and assignment of functionality/operationality to each component

Creation of original network from the exposure model

Update of the functionality of each component to the originally created infrastructure network and removal of the non-functional/non-operational components

Network analysis based on the original and updated infrastructure network

Computation of the following performance metrics, based on connectivity analysis:

Complete Connectivity Loss (CCL)

Partial Connectivity Loss (PCL)

Weighted Connectivity Loss (WCL)

Efficiency Loss (EL)

In summary, for the computation of infrastructure risk at connectivity level in OpenQuake, the input requirements and the outputs obtained are shown in Fig. 3.11.

Fig. 3.11 Input models required, and output obtained in OpenQuake for infrastructure risk assessment at connectivity level#

3.1.8.4. Specification of the Outputs Obtained#

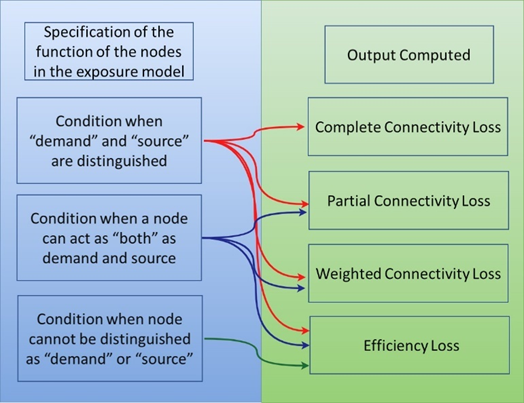

For the classic generic case where “demand” and “supply” are explicity mentioned in the column demand_or_supply, all four metrics are computed for overall network. Also, at nodal level, all the four metrics are calculated.

If some nodes behave as both supply and demand, and are assigned as “both” or “TAZ” (traffic analysis zone) in the column demand_or_supply, PCL, WCL and EL are computed for both nodal level and overall network.

If there is no assignment of “demand” or “supply” to the column demand_or_supply, only EL is computed for overall network. Also, at nodal level, for all the nodes, EL only is computed.

For the simplification, Fig. 3.12 has been added to understand what can be obtained by various specifications.

Fig. 3.12 Output computed from the implementation according to the specification of the function of the nodes#

Mainly, the library, NetworkX (Aric et al. 2008) has been used during the implementation. Further details can be found in Poudel et al. 2023. Also, much concept during the implementation has been drawn from Pitilakis et al. 2014.

3.1.8.5. References#

Aric A. Hagberg, Daniel A. Schult and Pieter J. Swart (2008) Exploring network structure, dynamics, and function using NetworkX, in Proceedings of the 7th Python in Science Conference (SciPy2008), Gäel Varoquaux, Travis Vaught, and Jarrod Millman (Eds), (Pasadena, CA USA), pp. 11–15

Poudel, A., Pitilakis, K., Silva, V. and Rao, A., (2023). Infrastructure seismic risk assessment: an overview and integration to contemporary open tool towards global usage. Bulletin of Earthquake Engineering. DOI: https://doi.org/10.1007/s10518-023-01693-z

Pitilakis, K, Franchin P, Khazai B, & Wenzel H, (Eds.) (2014) SYNER-G: systemic seismic vulnerability and risk assessment of complex urban, utility, lifeline systems and critical facilities: methodology and applications (Vol. 31), Springer, DOI: https://doi.org/10.1007/978-94-017-8835-9

3.1.8.6. Acknowledgements#

The present work has been done in the framework of grant agreement No. 813137 funded by the European Commission ITN-Marie Sklodowska-Curie project “New Challenges for Urban Engineering Seismology (URBASIS-EU)” by Astha Poudel, ESR 4.5 (Aristotle University of Thessaloniki, Université Grenoble Alpes) with the support from Kyriazis Pitilakis, Vitor Silva, Anirudh Rao, and Michele Simionato. Also, we would like to acknowledge the contributors of the SYNER-G project that was funded from the European Community’s 7th Framework Program under grant No. 244061 from which many conceptual frameworks have been built upon.

3.2. Risk Input Models#

The following sections describe the basic inputs required for a risk calculation, including exposuremodels, fragilitymodels, consequencemodels, and vulnerabilitymodels. In addition, each risk calculator also requires the appropriate hazard inputs computed in the region of interest. Hazard inputs include hazard curves for the classical probabilistic damage and risk calculators, Ground Motion Field for the scenario damage and risk calculators, or Stochastic Event Sets for the probabilistic event based calculators.

3.2.1. Exposure Models#

All risk calculators in the OpenQuake engine require an Exposure Model that needs to be provided in the Natural hazards’ Risk Markup Language schema, the use of which is illustrated through several examples in this section. The information included in an Exposure Model comprises a metadata section listing general information about the exposure, followed by a cost conversions section that describes how the different areas, costs, and occupancies for the assets will be specified, followed by data regarding each individual asset in the portfolio.

Note: Starting from OpenQuake engine30, the Exposure Model may be provided using csv files listing the asset information, along with an xml file conatining the metadata section for the exposure model that has been described in the examples above. See Example 8 below for an illustration of an exposure model using csv files.

A simple Exposure Model comprising a single asset is shown in the listing below.

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_2.0">

8 <description>Exposure Model Example</description>

9

10 <conversions>

11 <costTypes>

12 <costType name="structural" type="per_area" unit="USD" />

13 </costTypes>

14 <area type="per_asset" unit="SQM" />

15 </conversions>

16

17 <assets>

18 <asset id="a1" taxonomy="Adobe" number="5" area="100" >

19 <location lon="-122.000" lat="38.113" />

20 <costs>

21 <cost type="structural" value="10000" />

22 </costs>

23 <occupancies>

24 <occupancy occupants="20" period="day" />

25 </occupancies>

26 </asset>

27 </assets>

28

29</exposureModel>

30

31</nrml>

Let us take a look at each of the sections in the above example file in turn. The first part of the file contains the metadata section:

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_2.0">

8 <description>Exposure Model Example</description>

9

10 <conversions>

11 <costTypes>

12 <costType name="structural" type="per_area" unit="USD" />

13 </costTypes>

14 <area type="per_asset" unit="SQM" />

15 </conversions>

16

17 <assets>

18 <asset id="a1" taxonomy="Adobe" number="5" area="100" >

19 <location lon="-122.000" lat="38.113" />

20 <costs>

21 <cost type="structural" value="10000" />

22 </costs>

23 <occupancies>

24 <occupancy occupants="20" period="day" />

25 </occupancies>

26 </asset>

27 </assets>

28

29</exposureModel>

30

31</nrml>

The information in the metadata section is common to all of the assets in the portfolio and needs to be incorporated at the beginning of every Exposure Model file. There are a number of parameters that compose the metadata section, which is intended to provide general information regarding the assets within the Exposure Model. These parameters are described below:

id: mandatory; a unique string used to identify the Exposure Model. This string can contain letters (a–z; A–Z), numbers (0–9), dashes (–), and underscores (_), with a maximum of 100 characters.category: an optional string used to define the type of assets being stored (e.g: buildings, lifelines).taxonomySource: an optional attribute used to define the taxonomy being used to classify the assets.description: mandatory; a brief string (ASCII) with further information about the Exposure Model.

Next, let us look at the part of the file describing the area and cost conversions:

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_2.0">

8 <description>Exposure Model Example</description>

9

10 <conversions>

11 <costTypes>

12 <costType name="structural" type="per_area" unit="USD" />

13 </costTypes>

14 <area type="per_asset" unit="SQM" />

15 </conversions>

16

17 <assets>

18 <asset id="a1" taxonomy="Adobe" number="5" area="100" >

19 <location lon="-122.000" lat="38.113" />

20 <costs>

21 <cost type="structural" value="10000" />

22 </costs>

23 <occupancies>

24 <occupancy occupants="20" period="day" />

25 </occupancies>

26 </asset>

27 </assets>

28

29</exposureModel>

30

31</nrml>

Notice that the costType element defines a name, a type, and

a unit attribute.

The Natural hazards’ Risk Markup Language schema for the Exposure Model allows the definition of a

structural cost, a nonstructural components cost, a contents cost, and a

business interruption or downtime cost for each asset in the portfolio.

Thus, the valid values for the name attribute of the costType

element are the following:

structural: used to specify the structural replacement cost of assetsnonstructural: used to specify the replacement cost for the nonstructural components of assetscontents: used to specify the contents replacement costbusiness_interruption: used to specify the cost that will be incurred per unit time that a damaged asset remains closed following an earthquake

The Exposure Model shown in the example above defines only the structural values for the assets. However, multiple cost types can be defined for each asset in the same Exposure Model.

The unit attribute of the costType element is used for

specifying the currency unit for the corresponding cost type. Note that

the OpenQuake engine itself is agnostic to the currency units; the unit is

thus a descriptive attribute which is used by the OpenQuake engine to annotate

the results of a risk assessment. This attribute can be set to any valid

Unicode string.

The type attribute of the costType element specifies whether the

costs will be provided as an aggregated value for an asset, or per

building or unit comprising an asset, or per unit area of an asset. The

valid values for the type attribute of the costType element are

the following:

aggregated: indicates that the replacement costs will be provided as an aggregated value for each assetper_asset: indicates that the replacement costs will be provided per structural unit comprising each assetper_area: indicates that the replacement costs will be provided per unit area for each asset

If the costs are to be specified per_area for any of the

costTypes, the area element will also need to be defined in the

conversions section. The area element defines a type, and a

unit attribute.

The unit attribute of the area element is used for specifying

the units for the area of an asset. The OpenQuake engine itself is agnostic to

the area units; the unit is thus a descriptive attribute which is

used by the OpenQuake engine to annotate the results of a risk assessment. This

attribute can be set to any valid ASCII string.

The type attribute of the area element specifies whether the

area will be provided as an aggregated value for an asset, or per

building or unit comprising an asset. The valid values for the type

attribute of the area element are the following:

aggregated: indicates that the area will be provided as an aggregated value for each assetper_asset: indicates that the area will be provided per building or unit comprising each asset

The way the information about the characteristics of the assets in an Exposure Model are stored can vary strongly depending on how and why the data was compiled. As an example, if national census information is used to estimated the distribution of assets in a given region, it is likely that the number of buildings within a given geographical area will be used to define the dataset, and will be used for estimating the number of collapsed buildings for a scenario earthquake. On the other hand, if simplified methodologies based on proxy data such as population distribution are used to develop the Exposure Model, then it is likely that the built up area or economic cost of each building typology will be directly derived, and will be used for the estimation of economic losses.

Finally, let us look at the part of the file describing the set of assets in the portfolio to be used in seismic damage or risk calculations:

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_2.0">

8 <description>Exposure Model Example</description>

9

10 <conversions>

11 <costTypes>

12 <costType name="structural" type="per_area" unit="USD" />

13 </costTypes>

14 <area type="per_asset" unit="SQM" />

15 </conversions>

16

17 <assets>

18 <asset id="a1" taxonomy="Adobe" number="5" area="100" >

19 <location lon="-122.000" lat="38.113" />

20 <costs>

21 <cost type="structural" value="10000" />

22 </costs>

23 <occupancies>

24 <occupancy occupants="20" period="day" />

25 </occupancies>

26 </asset>

27 </assets>

28

29</exposureModel>

30

31</nrml>

Each asset definition involves specifiying a set of mandatory and optional attributes concerning the asset. The following set of attributes can be assigned to each asset based on the current schema for the Exposure Model:

id: mandatory; a unique string used to identify the given asset, which is used by the OpenQuake engine to relate each asset with its associated results. This string can contain letters (a–z; A–Z), numbers (0–9), dashes (-), and underscores (_), with a maximum of 100 characters.taxonomy: mandatory; this string specifies the building typology of the given asset. The taxonomy strings can be user-defined, or based on an existing classification scheme such as the GEM Taxonomy, PAGER, or EMS-98.number: the number of individual structural units comprising a given asset. This attribute is mandatory for damage calculations. For risk calculations, this attribute must be defined if either the area or any of the costs are provided per structural unit comprising each asset.area: area of the asset, at a given location. As mentioned earlier, the area is a mandatory attribute only if any one of the costs for the asset is specified per unit area.location: mandatory; specifies the longitude (between -180\(^{\circ}\) to 180\(^{\circ}\)) and latitude (between -90\(^{\circ}\) to 90 \(^{\circ}\)) of the given asset, both specified in decimal degrees [2].costs: specifies a set of costs for the given asset. The replacement value for different cost types must be provided on separate lines within thecostselement. As shown in the example above, each cost entry must define thetypeand thevalue. Currently supported valid options for the costtypeare:structural,nonstructural,contents, andbusiness_interruption.occupancies: mandatory only for probabilistic or scenario risk calculations that specify anoccupants_vulnerability_file. Each entry within this element specifies the number of occupants for the asset for a particular period of the day. As shown in the example above, each occupancy entry must define theperiodand theoccupants. Currently supported valid options for theperiodare:day,transit, andnight. Currently, the number ofoccupantsfor an asset can only be provided as an aggregated value for the asset.

For the purposes of performing a retrofitting benefit/cost analysis, it

is also necessary to define the retrofitting cost (retrofitted). The

combination between the possible options in which these three attributes

can be defined leads to four ways of storing the information about the

assets. For each of these cases a brief explanation and example is

provided in this section.

Example 1

This example illustrates an Exposure Model in which the aggregated cost (structural, nonstructural, contents and business interruption) of the assets of each taxonomy for a set of locations is directly provided. Thus, in order to indicate how the various costs will be defined, the following information needs to be stored in the Exposure Model file, as shown in the listing below.

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_2.0">

8 <description>

9 Exposure model with aggregated replacement costs for each asset

10 </description>

11 <conversions>

12 <costTypes>

13 <costType name="structural" type="aggregated" unit="USD" />

14 <costType name="nonstructural" type="aggregated" unit="USD" />

15 <costType name="contents" type="aggregated" unit="USD" />

16 <costType name="business_interruption" type="aggregated" unit="USD/month"/>

17 </costTypes>

18 </conversions>

19 <assets>

20 <asset id="a1" taxonomy="Adobe" >

21 <location lon="-122.000" lat="38.113" />

22 <costs>

23 <cost type="structural" value="20000" />

24 <cost type="nonstructural" value="30000" />

25 <cost type="contents" value="10000" />

26 <cost type="business_interruption" value="4000" />

27 </costs>

28 </asset>

29 </assets>

30</exposureModel>

31

32</nrml>

In this case, the cost type of each component as been defined as

aggregated. Once the way in which each cost is going to be defined

has been established, the values for each asset can be stored according

to the format shown in

the listing.

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_2.0">

8 <description>

9 Exposure model with aggregated replacement costs for each asset

10 </description>

11 <conversions>

12 <costTypes>

13 <costType name="structural" type="aggregated" unit="USD" />

14 <costType name="nonstructural" type="aggregated" unit="USD" />

15 <costType name="contents" type="aggregated" unit="USD" />

16 <costType name="business_interruption" type="aggregated" unit="USD/month"/>

17 </costTypes>

18 </conversions>

19 <assets>

20 <asset id="a1" taxonomy="Adobe" >

21 <location lon="-122.000" lat="38.113" />

22 <costs>

23 <cost type="structural" value="20000" />

24 <cost type="nonstructural" value="30000" />

25 <cost type="contents" value="10000" />

26 <cost type="business_interruption" value="4000" />

27 </costs>

28 </asset>

29 </assets>

30</exposureModel>

31

32</nrml>

Each asset is uniquely identified by its id. Then, a pair of

coordinates (latitude and longitude) for a location where the asset

is assumed to exist is defined. Each asset must be classified according

to a taxonomy, so that the OpenQuake engine is capable of employing the

appropriate Vulnerability Function or Fragility Function in the risk

calculations. Finally, the cost values of each type are stored

within the costs attribute. In this example, the aggregated value

for all structural units (within a given asset) at each location is

provided directly, so there is no need to define other attributes such

as number or area. This mode of representing an Exposure Model is

probably the simplest one.

Example 2

In the snippet shown in the listing below, an Exposure Model containing the number of structural units and the associated costs per unit of each asset is presented.

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_2.0">

8 <description>

9 Exposure model with replacement costs per building for each asset

10 </description>

11 <conversions>

12 <costTypes>

13 <costType name="structural" type="per_asset" unit="USD" />

14 <costType name="nonstructural" type="per_asset" unit="USD" />

15 <costType name="contents" type="per_asset" unit="USD" />

16 <costType name="business_interruption" type="per_asset" unit="USD/month"/>

17 </costTypes>

18 </conversions>

19 <assets>

20 <asset id="a1" number="2" taxonomy="Adobe" >

21 <location lon="-122.000" lat="38.113" />

22 <costs>

23 <cost type="structural" value="7500" />

24 <cost type="nonstructural" value="11250" />

25 <cost type="contents" value="3750" />

26 <cost type="business_interruption" value="1500" />

27 </costs>

28 </asset>

29 </assets>

30</exposureModel>

31

32</nrml>

For this case, the cost type has been set to per_asset. Then,

the information from each asset can be stored following the format shown

in

the listing below.

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_2.0">

8 <description>

9 Exposure model with replacement costs per building for each asset

10 </description>

11 <conversions>

12 <costTypes>

13 <costType name="structural" type="per_asset" unit="USD" />

14 <costType name="nonstructural" type="per_asset" unit="USD" />

15 <costType name="contents" type="per_asset" unit="USD" />

16 <costType name="business_interruption" type="per_asset" unit="USD/month"/>

17 </costTypes>

18 </conversions>

19 <assets>

20 <asset id="a1" number="2" taxonomy="Adobe" >

21 <location lon="-122.000" lat="38.113" />

22 <costs>

23 <cost type="structural" value="7500" />

24 <cost type="nonstructural" value="11250" />

25 <cost type="contents" value="3750" />

26 <cost type="business_interruption" value="1500" />

27 </costs>

28 </asset>

29 </assets>

30</exposureModel>

31

32</nrml>

In this example, the various costs for each asset is not provided

directly, as in the previous example. In order to carry out the risk

calculations in which the economic cost of each asset is provided, the

OpenQuake engine multiplies, for each asset, the number of units (buildings) by

the “per asset” replacement cost. Note that in this case, there is no

need to specify the attribute area.

Example 3

The example shown in the listing below comprises an Exposure Model containing the built up area of each asset, and the associated costs are provided per unit area.

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_2.0">

8 <description>

9 Exposure model with replacement costs per unit area;

10 and areas provided as aggregated values for each asset

11 </description>

12 <conversions>

13 <area type="aggregated" unit="SQM" />

14 <costTypes>

15 <costType name="structural" type="per_area" unit="USD" />

16 <costType name="nonstructural" type="per_area" unit="USD" />

17 <costType name="contents" type="per_area" unit="USD" />

18 <costType name="business_interruption" type="per_area" unit="USD/month"/>

19 </costTypes>

20 </conversions>

21 <assets>

22 <asset id="a1" area="1000" taxonomy="Adobe" >

23 <location lon="-122.000" lat="38.113" />

24 <costs>

25 <cost type="structural" value="5" />

26 <cost type="nonstructural" value="7.5" />

27 <cost type="contents" value="2.5" />

28 <cost type="business_interruption" value="1" />

29 </costs>

30 </asset>

31 </assets>

32</exposureModel>

33

34</nrml>

In order to compile an Exposure Model with this structure, the cost

type should be set to per_area. In addition, it is also

necessary to specify if the area that is being store represents the

aggregated area of number of units within an asset, or the average area

of a single unit. In this particular case, the area that is being

stored is the aggregated built up area per asset, and thus this

attribute was set to aggregated.

The listing below

illustrates the definition of the assets for this example.

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_2.0">

8 <description>

9 Exposure model with replacement costs per unit area;

10 and areas provided as aggregated values for each asset

11 </description>

12 <conversions>

13 <area type="aggregated" unit="SQM" />

14 <costTypes>

15 <costType name="structural" type="per_area" unit="USD" />

16 <costType name="nonstructural" type="per_area" unit="USD" />

17 <costType name="contents" type="per_area" unit="USD" />

18 <costType name="business_interruption" type="per_area" unit="USD/month"/>

19 </costTypes>

20 </conversions>

21 <assets>

22 <asset id="a1" area="1000" taxonomy="Adobe" >

23 <location lon="-122.000" lat="38.113" />

24 <costs>

25 <cost type="structural" value="5" />

26 <cost type="nonstructural" value="7.5" />

27 <cost type="contents" value="2.5" />

28 <cost type="business_interruption" value="1" />

29 </costs>

30 </asset>

31 </assets>

32</exposureModel>

33

34</nrml>

Once again, the OpenQuake engine needs to carry out some calculations in order to

compute the different costs per asset. In this case, this value is

computed by multiplying the aggregated built up area of each asset

by the associated cost per unit area. Notice that in this case, there is

no need to specify the attribute number.

Example 4

This example demonstrates an Exposure Model that defines the number of structural units for each asset, the average built up area per structural unit and the associated costs per unit area. The listing below shows the metadata definition for an Exposure Model built in this manner.

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_2.0">

8 <description>

9 Exposure model with replacement costs per unit area;

10 and areas provided per building for each asset

11 </description>

12 <conversions>

13 <area type="per_asset" unit="SQM" />

14 <costTypes>

15 <costType name="structural" type="per_area" unit="USD" />

16 <costType name="nonstructural" type="per_area" unit="USD" />

17 <costType name="contents" type="per_area" unit="USD" />

18 <costType name="business_interruption" type="per_area" unit="USD/month"/>

19 </costTypes>

20 </conversions>

21 <assets>

22 <asset id="a1" number="3" area="400" taxonomy="Adobe" >

23 <location lon="-122.000" lat="38.113" />

24 <costs>

25 <cost type="structural" value="10" />

26 <cost type="nonstructural" value="15" />

27 <cost type="contents" value="5" />

28 <cost type="business_interruption" value="2" />

29 </costs>

30 </asset>

31 </assets>

32</exposureModel>

33

34</nrml>

Similarly to what was described in the previous example, the various

costs type also need to be established as per_area, but the

type of area is now defined as per_asset.

The listing below

illustrates the definition of the assets for this example.

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_2.0">

8 <description>

9 Exposure model with replacement costs per unit area;

10 and areas provided per building for each asset

11 </description>

12 <conversions>

13 <area type="per_asset" unit="SQM" />

14 <costTypes>

15 <costType name="structural" type="per_area" unit="USD" />

16 <costType name="nonstructural" type="per_area" unit="USD" />

17 <costType name="contents" type="per_area" unit="USD" />

18 <costType name="business_interruption" type="per_area" unit="USD/month"/>

19 </costTypes>

20 </conversions>

21 <assets>

22 <asset id="a1" number="3" area="400" taxonomy="Adobe" >

23 <location lon="-122.000" lat="38.113" />

24 <costs>

25 <cost type="structural" value="10" />

26 <cost type="nonstructural" value="15" />

27 <cost type="contents" value="5" />

28 <cost type="business_interruption" value="2" />

29 </costs>

30 </asset>

31 </assets>

32</exposureModel>

33

34</nrml>

In this example, the OpenQuake engine will make use of all the parameters to estimate the various costs of each asset, by multiplying the number of structural units by its average built up area, and then by the respective cost per unit area.

Example 5

In this example, additional information will be included, which is required for other risk analysis besides loss estimation, such as the benefit/cost analysis.

In order to perform a benefit/cost assessment, it is necessary to indicate the retrofitting cost. This parameter is handled in the same manner as the structural cost, and it should be stored according to the format shown in the listing below.

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_2.0">

8 <description>Exposure model illustrating retrofit costs</description>

9 <conversions>

10 <costTypes>

11 <costType name="structural" type="aggregated" unit="USD"

12 retrofittedType="per_asset" retrofittedUnit="USD" />

13 </costTypes>

14 </conversions>

15 <assets>

16 <asset id="a1" taxonomy="Adobe" number="1" >

17 <location lon="-122.000" lat="38.113" />

18 <costs>

19 <cost type="structural" value="10000" retrofitted="2000" />

20 </costs>

21 </asset>

22 </assets>

23</exposureModel>

24

25</nrml>

Despite the fact that for the demonstration of how the retrofitting cost can be stored the per building type of cost structure described in Example 1 was used, it is important to mention that any of the other cost storing approaches can also be employed (Examples 2–4).

Example 6

The OpenQuake engine is also capable of estimating human losses, based on the number of occupants in an asset, at a certain time of the day. The example Exposure Model shown in the listing below illustrates how this parameter is defined for each asset. In addition, this example also serves the purpose of presenting an Exposure Model in which three cost types have been defined using three different options.

As previously mentioned, in this example only three costs are being

stored, and each one follows a different approach. The structural

cost is being defined as the aggregate replacement cost for all of the

buildings comprising the asset (Example 1), the nonstructural value

is defined as the replacement cost per unit area where the area is

defined per building comprising the asset (Example 4), and the

contents and business_interruption values are provided per

building comprising the asset (Example 2). The number of occupants at

different times of the day are also provided as aggregated values for

all of the buildings comprising the asset.

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_2.0">

8 <description>Exposure model example with occupants</description>

9 <conversions>

10 <costTypes>

11 <costType name="structural" type="aggregated" unit="USD" />

12 <costType name="nonstructural" type="per_area" unit="USD" />

13 <costType name="contents" type="per_asset" unit="USD" />

14 <costType name="business_interruption" type="per_asset" unit="USD/month" />

15 </costTypes>

16 <area type="per_asset" unit="SQM" />

17 </conversions>

18 <assets>

19 <asset id="a1" taxonomy="Adobe" number="5" area="200" >

20 <location lon="-122.000" lat="38.113" />

21 <costs>

22 <cost type="structural" value="20000" />

23 <cost type="nonstructural" value="15" />

24 <cost type="contents" value="2400" />

25 <cost type="business_interruption" value="1500" />

26 </costs>

27 <occupancies>

28 <occupancy occupants="6" period="day" />

29 <occupancy occupants="10" period="transit" />

30 <occupancy occupants="20" period="night" />

31 </occupancies>

32 </asset>

33 </assets>

34</exposureModel>

35

36</nrml>

Example 7

Starting from OpenQuake engine27, the user may also provide a set of tags for each asset in the Exposure Model. The primary intended use case for the tags is to enable aggregation or accumulation of risk results (casualties / damages / losses) for each tag. The tags could be used to specify location attributes, occupancy types, or insurance policy codes for the different assets in the Exposure Model.

The example Exposure Model shown in the listing below illustrates how one or more tags can be defined for each asset.

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example_with_tags"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_2.0">

8 <description>Exposure Model Example with Tags</description>

9

10 <conversions>

11 <costTypes>

12 <costType name="structural" type="per_area" unit="USD" />

13 </costTypes>

14 <area type="per_asset" unit="SQM" />

15 </conversions>

16

17 <tagNames>state county tract city zip cresta</tagNames>

18

19 <assets>

20 <asset id="a1" taxonomy="Adobe" number="5" area="100" >

21 <location lon="-122.000" lat="38.113" />

22 <costs>

23 <cost type="structural" value="10000" />

24 </costs>

25 <occupancies>

26 <occupancy occupants="20" period="day" />

27 </occupancies>

28 <tags state="California" county="Solano" tract="252702"

29 city="Suisun" zip="94585" cresta="A.11"/>

30 </asset>

31 </assets>

32

33</exposureModel>

34

35</nrml>

The list of tag names that will be used in the Exposure Model must be provided in the metadata section of the exposure file, as shown in the following snippet from the full file:

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example_with_tags"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_2.0">

8 <description>Exposure Model Example with Tags</description>

9

10 <conversions>

11 <costTypes>

12 <costType name="structural" type="per_area" unit="USD" />

13 </costTypes>

14 <area type="per_asset" unit="SQM" />

15 </conversions>

16

17 <tagNames>state county tract city zip cresta</tagNames>

18

19 <assets>

20 <asset id="a1" taxonomy="Adobe" number="5" area="100" >

21 <location lon="-122.000" lat="38.113" />

22 <costs>

23 <cost type="structural" value="10000" />

24 </costs>

25 <occupancies>

26 <occupancy occupants="20" period="day" />

27 </occupancies>

28 <tags state="California" county="Solano" tract="252702"

29 city="Suisun" zip="94585" cresta="A.11"/>

30 </asset>

31 </assets>

32

33</exposureModel>

34

35</nrml>

The tag values for the different tags can then be specified for each asset as shown in the following snippet from the same file:

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example_with_tags"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_2.0">

8 <description>Exposure Model Example with Tags</description>

9

10 <conversions>

11 <costTypes>

12 <costType name="structural" type="per_area" unit="USD" />

13 </costTypes>

14 <area type="per_asset" unit="SQM" />

15 </conversions>

16

17 <tagNames>state county tract city zip cresta</tagNames>

18

19 <assets>

20 <asset id="a1" taxonomy="Adobe" number="5" area="100" >

21 <location lon="-122.000" lat="38.113" />

22 <costs>

23 <cost type="structural" value="10000" />

24 </costs>

25 <occupancies>

26 <occupancy occupants="20" period="day" />

27 </occupancies>

28 <tags state="California" county="Solano" tract="252702"

29 city="Suisun" zip="94585" cresta="A.11"/>

30 </asset>

31 </assets>

32

33</exposureModel>

34

35</nrml>

Note that it is not mandatory that every tag name specified in the metadata section must be provided with a tag value for each asset.

Example 8

This example illustrates the use of multiple csv files containing the assets information, in conjunction with the metadata section in the usual xml format.

Let us take a look at the metadata section of the Exposure Model, which is listed as usual in an xml file:

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns:gml="http://www.opengis.net/gml"

3 xmlns="http://openquake.org/xmlns/nrml/0.5">

4

5<exposureModel id="exposure_example_with_csv_files"

6 category="buildings"

7 taxonomySource="GEM_Building_Taxonomy_3.0">

8 <description>Exposure Model Example with CSV Files</description>

9

10 <conversions>

11 <costTypes>

12 <costType name="structural" type="aggregated" unit="USD" />

13 <costType name="nonstructural" type="aggregated" unit="USD" />

14 <costType name="contents" type="aggregated" unit="USD" />

15 </costTypes>

16 <area type="per_asset" unit="SQFT" />

17 </conversions>

18

19 <occupancyPeriods>night</occupancyPeriods>

20

21 <tagNames>occupancy state_id state county_id county tract</tagNames>

22

23 <assets>

24 Washington.csv

25 Oregon.csv

26 California.csv

27 </assets>

28

29</exposureModel>

30

31</nrml>

As in all previous examples, the information in the metadata section is common to all of the assets in the portfolio.

The asset data can be provided in one or more csv files. The path to

each of the csv files containing the asset data must be listed between

the <assets> and </assets> xml tags.

In the example shown above, the exposure information is provided in three csv files, Washington.csv, Oregon.csv, and California.csv. To illustrate the format of the csv files, we have shown below the header and first few lines of the file Washington.csv in Table 3.1.

id |

lon |

lat |

taxonomy |

number |

structural |

area |

occupancy |

state |

county |

|---|---|---|---|---|---|---|---|---|---|

A1 |

-122.7 |

46.5 |

AGR1-W1-LC |

7.6 |

898000 |

18 |

Agr |

Washington |

Lewis County |

A2 |

-122.7 |

46.5 |

AGR1-PC1-LC |

0.6 |

67000 |

1 |

Agr |

Washington |

Lewis County |

A3 |

-122.7 |

46.5 |

AGR1-C2L-PC |

0.6 |

67000 |

1 |

Agr |

Washington |

Lewis County |

A4 |

-122.7 |

46.5 |

AGR1-PC1-PC |

1.5 |

179000 |

4 |

Agr |

Washington |

Lewis County |

A5 |

-122.7 |

46.5 |

AGR1-S2L-LC |

0.6 |

67000 |

1 |

Agr |

Washington |

Lewis County |

A6 |

-122.7 |

46.5 |

AGR1-S1L-PC |

1.1 |

133000 |

3 |

Agr |

Washington |

Lewis County |

A7 |

-122.7 |

46.5 |

AGR1-S2L-PC |

1.5 |

182000 |

4 |

Agr |

Washington |

Lewis County |

A8 |

-122.7 |

46.5 |

AGR1-S3-PC |

1.1 |

133000 |

3 |

Agr |

Washington |

Lewis County |

A9 |

-122.7 |

46.5 |

AGR1-RM1L-LC |

0.6 |

68000 |

1 |

Agr |

Washington |

Lewis County |

Note that the xml metadata section for exposure models provided using

csv files must include the xml tag <occupancyPeriods> listing the

periods of day for which the number of occupants in each asset will be

listed in the csv files. In case the number of occupants are not listed

in the csv files, a self-closing tag <occupancyPeriods /> should be

included in the xml metadata section.

A web-based tool to build an Exposure Model in the Natural hazards’ Risk Markup Language schema starting from a csv file or a spreadsheet can be found at the OpenQuake platform at the following address: https://platform.openquake.org/ipt/.

3.2.2. Fragility Models#

This section describes the schema currently used to store fragilitymodels, which are required for the Scenario Damage Calculator and the Classical Probabilistic Seismic Damage Calculator. In order to perform probabilistic or scenario damage calculations, it is necessary to define a Fragility Function for each building typology present in the Exposure Model. A Fragility Model defines a set of fragilityfunctions, describing the probability of exceeding a set of limit, or damage, states. The fragilityfunctions can be defined using either a discrete or a continuous format, and the Fragility Model file can include a mix of both types of fragilityfunctions.

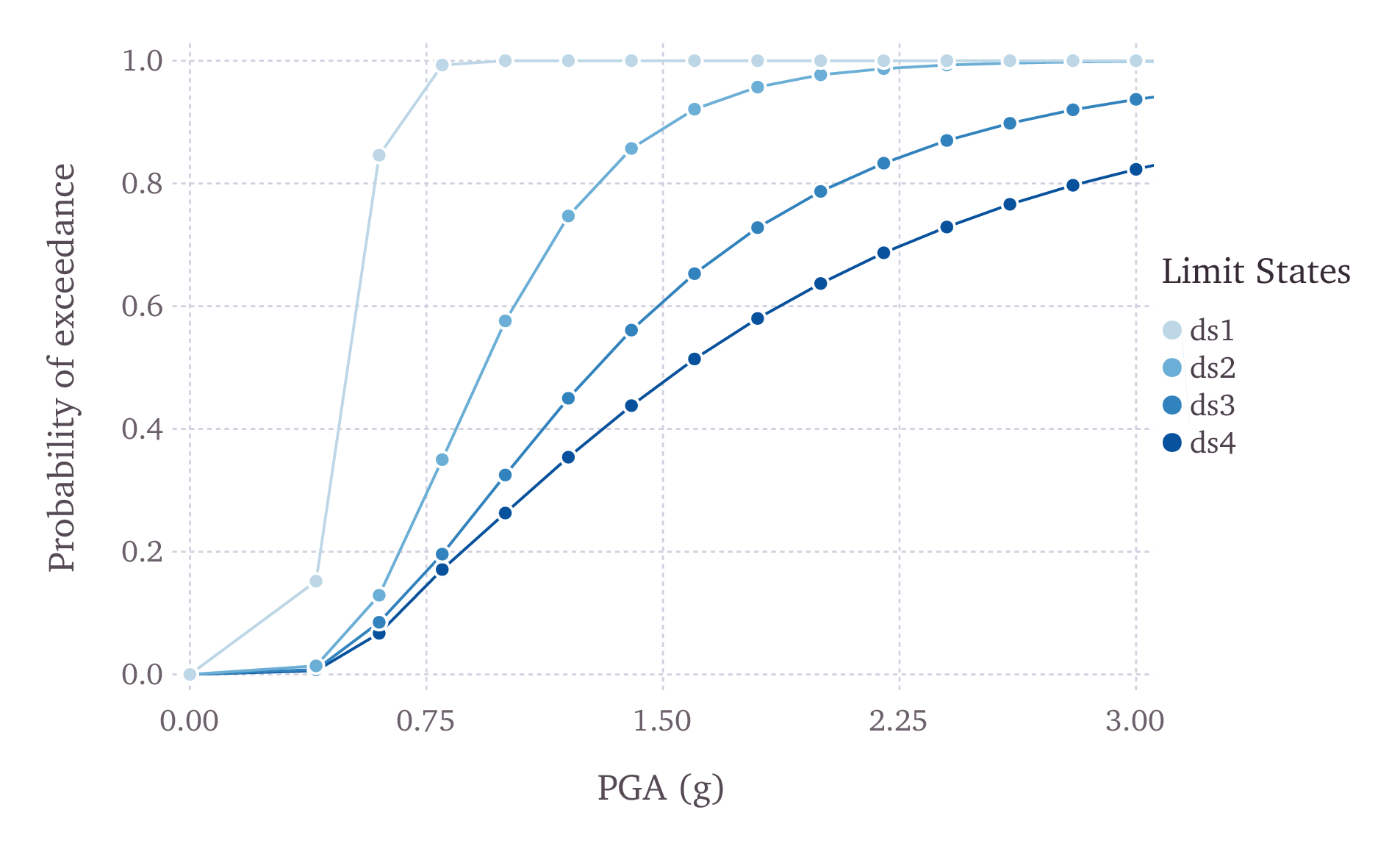

For discrete fragilityfunctions, sets of probabilities of exceedance (one set per limit state) are defined for a list of intensity measure levels, as illustrated in Fig. 3.13.

Fig. 3.13 Graphical representation of a discrete fragility model#

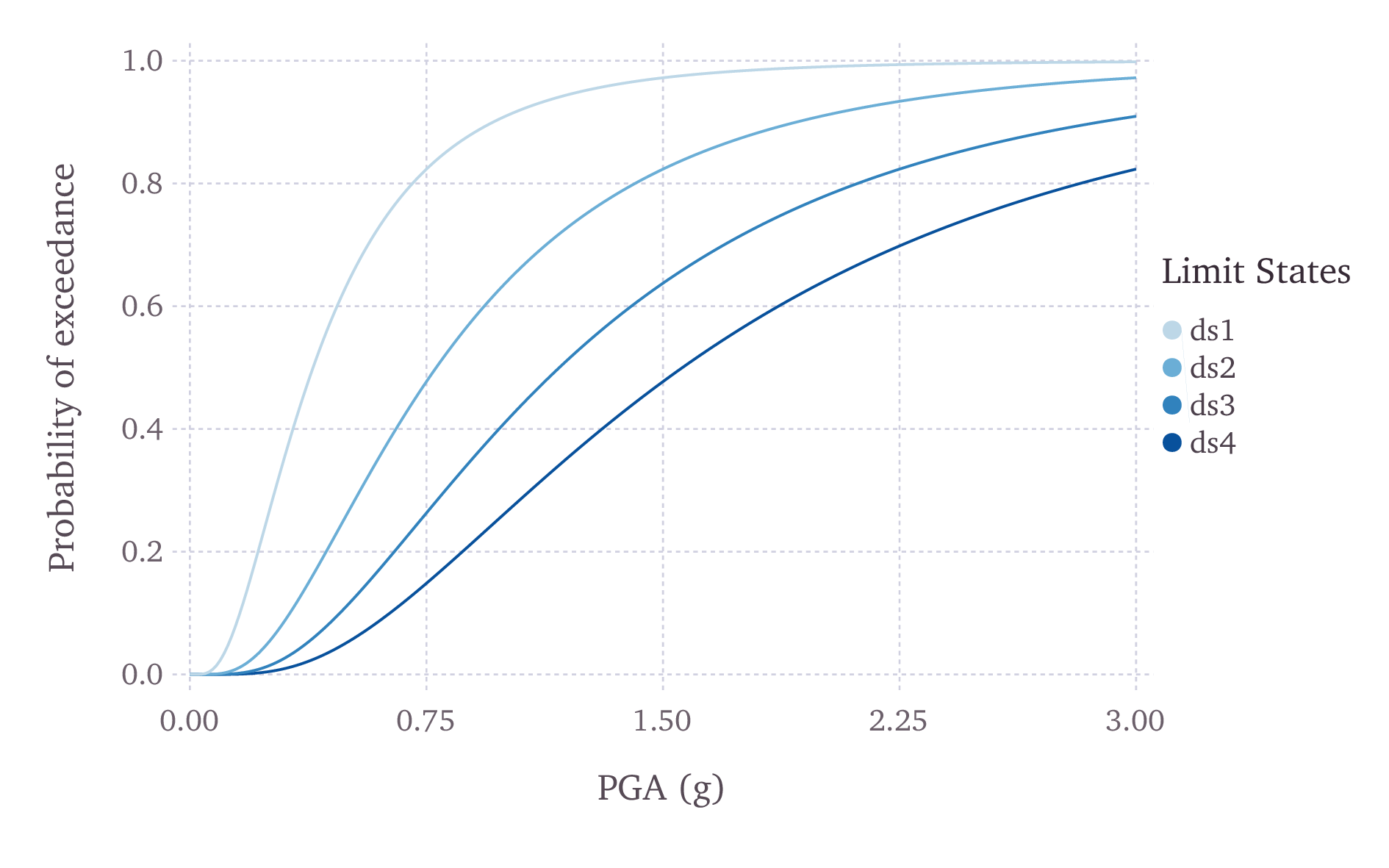

The fragilityfunctions can also be defined as continuous functions, through the use of cumulative lognormal distribution functions. In Fig. 3.14, a continuous Fragility Model is presented.

Fig. 3.14 Graphical representation of a continuous fragility model#

An example Fragility Model comprising one discrete Fragility Function and one continuous Fragility Function is shown in the listing below.

1<?xml version="1.0" encoding="UTF-8"?>

2<nrml xmlns="http://openquake.org/xmlns/nrml/0.5">

3

4<fragilityModel id="fragility_example"

5 assetCategory="buildings"

6 lossCategory="structural">

7

8 <description>Fragility Model Example</description>

9 <limitStates>slight moderate extensive complete</limitStates>

10

11 <fragilityFunction id="Woodframe_TwoStorey" format="discrete">

12 <imls imt="PGA" noDamageLimit="0.05">0.005 0.2 0.4 0.6 0.8 1.0 1.2</imls>

13 <poes ls="slight">0.00 0.01 0.15 0.84 0.99 1.00 1.00</poes>

14 <poes ls="moderate">0.00 0.00 0.01 0.12 0.35 0.57 0.74</poes>

15 <poes ls="extensive">0.00 0.00 0.00 0.08 0.19 0.32 0.45</poes>

16 <poes ls="complete">0.00 0.00 0.00 0.06 0.17 0.26 0.35</poes>

17 </fragilityFunction>

18

19 <fragilityFunction id="RC_LowRise" format="continuous" shape="logncdf">

20 <imls imt="SA(0.3)" noDamageLimit="0.05" minIML="0.0" maxIML="5.0"/>

21 <params ls="slight" mean="0.50" stddev="0.10"/>

22 <params ls="moderate" mean="1.00" stddev="0.40"/>

23 <params ls="extensive" mean="1.50" stddev="0.90"/>

24 <params ls="complete" mean="2.00" stddev="1.60"/>

25 </fragilityFunction>

26

27</fragilityModel>

28

29</nrml>

The initial portion of the schema contains general information that describes some general aspects of the Fragility Model. The information in this metadata section is common to all of the functions in the Fragility Model and needs to be included at the beginning of every Fragility Model file. The parameters of the metadata section are shown in the snippet below and described after the snippet:

4<fragilityModel id="fragility_example"

5 assetCategory="buildings"

6 lossCategory="structural">

7

8 <description>Fragility Model Example</description>

9 <limitStates>slight moderate extensive complete</limitStates>

id: mandatory; a unique string used to identify the Fragility Model. This string can contain letters (a–z; A–Z), numbers (0–9), dashes (-), and underscores (_), with a maximum of 100 characters.assetCategory: an optional string used to specify the type of assets for which fragilityfunctions will be defined in this file (e.g: buildings, lifelines).lossCategory: mandatory; valid strings for this attribute are “structural”, “nonstructural”, “contents”, and “business_interruption”.description: mandatory; a brief string (ASCII) with further relevant information about the Fragility Model, for example, which building typologies are covered or the source of the functions in the Fragility Model.limitStates: mandatory; this field is used to define the number and nomenclature of each limit state. Four limit states are employed in the example above, but it is possible to use any number of discrete states, as long as a fragility curve is always defined for each limit state. The limit states must be provided as a set of strings separated by whitespaces between each limit state. Each limit state string can contain letters (a–z; A–Z), numbers (0–9), dashes (-), and underscores (_). Please ensure that there is no whitespace within the name of any individual limit state.

The following snippet from the above Fragility Model example file defines a discrete Fragility Function:

19 <fragilityFunction id="Woodframe_TwoStorey" format="discrete">

20 <imls imt="PGA" noDamageLimit="0.05">0.005 0.2 0.4 0.6 0.8 1.0 1.2</imls>

21 <poes ls="slight">0.00 0.01 0.15 0.84 0.99 1.00 1.00</poes>

22 <poes ls="moderate">0.00 0.00 0.01 0.12 0.35 0.57 0.74</poes>

23 <poes ls="extensive">0.00 0.00 0.00 0.08 0.19 0.32 0.45</poes>

24 <poes ls="complete">0.00 0.00 0.00 0.06 0.17 0.26 0.35</poes>

25 </fragilityFunction>

The following attributes are needed to define a discrete Fragility Function:

id: mandatory; a unique string used to identify the taxonomy for which the function is being defined. This string is used to relate the Fragility Function with the relevant asset in the Exposure Model. This string can contain letters (a–z; A–Z), numbers (0–9), dashes (-), and underscores (_), with a maximum of 100 characters.format: mandatory; for discrete fragilityfunctions, this attribute should be set to “discrete”.imls: mandatory; this attribute specifies the list of intensity levels for which the limit state probabilities of exceedance will be defined. In addition, it is also necessary to define the intensity measure type (imt). Optionally, anoDamageLimitcan be specified, which defines the intensity level below which the probability of exceedance for all limit states is taken to be zero.poes: mandatory; this field is used to define the probabilities of exceedance (poes) for each limit state for each discrete Fragility Function. It is also necessary to specify which limit state the exceedance probabilities are being defined for using the attributels. The probabilities of exceedance for each limit state must be provided on a separate line; and the number of exceedance probabilities for each limit state defined by thepoesattribute must be equal to the number of intensity levels defined by the attributeimls. Finally, the number and names of the limit states in each fragility function must be equal to the number of limit states defined earlier in the metadata section of the Fragility Model using the attributelimitStates.

The following snippet from the above Fragility Model example file defines a continuous Fragility Function:

11 <fragilityFunction id="RC_LowRise" format="continuous" shape="logncdf">

12 <imls imt="SA(0.3)" noDamageLimit="0.05" minIML="0.0" maxIML="5.0"/>

13 <params ls="slight" mean="0.50" stddev="0.10"/>

14 <params ls="moderate" mean="1.00" stddev="0.40"/>

15 <params ls="extensive" mean="1.50" stddev="0.90"/>

16 <params ls="complete" mean="2.00" stddev="1.60"/>

17 </fragilityFunction>

The following attributes are needed to define a continuous Fragility Function:

id: mandatory; a unique string used to identify the taxonomy for which the function is being defined. This string is used to relate the Fragility Function with the relevant asset in the Exposure Model. This string can contain letters (a–z; A–Z), numbers (0–9), dashes (-), and underscores (_), with a maximum of 100 characters.format: mandatory; for continuous fragilityfunctions, this attribute should be set to “continuous”.shape: mandatory; for continuous fragilityfunctions using the lognormal cumulative distrution, this attribute should be set to “logncdf”. At present, only the lognormal cumulative distribution function can be used for representing continuous fragilityfunctions.imls: mandatory; this element specifies aspects related to the intensity measure used by the the Fragility Function. The range of intensity levels for which the continuous fragilityfunctions are valid is specified using the attributesminIMLandmaxIML. In addition, it is also necessary to define the intensity measure typeimt. Optionally, anoDamageLimitcan be specified, which defines the intensity level below which the probability of exceedance for all limit states is taken to be zero.params: mandatory; this field is used to define the parameters of the continuous curve for each limit state for this Fragility Function. For a lognormal cumulative distrbution function, the two parameters required to specify the function are the mean and standard deviation of the intensity level. These parameters are defined for each limit state using the attributesmeanandstddevrespectively. The attributelsspecifies the limit state for which the parameters are being defined. The parameters for each limit state must be provided on a separate line. The number and names of the limit states in each Fragility Function must be equal to the number of limit states defined earlier in the metadata section of the Fragility Model using the attributelimitStates. A point worth clarifying is that the parameters to be defined in the fragility input file are the mean and standard deviation of the intensity measure level (IML) for each damage state, and not the mean and standard deviation of log(IML). Thus, if the intensity measure is PGA or SA for instance, the units for the input parameters will be ’g’.

Note that the schema for representing fragilitymodels has changed between Natural hazards’ Risk Markup Language v0.4 (used prior to OpenQuake engine17) and Natural hazards’ Risk Markup Language v0.5 (introduced in OpenQuake engine17).

A deprecation warning is printed every time you attempt to use a

Fragility Model in the old Natural hazards’ Risk Markup Language v0.4 format in an OpenQuake engine17 (or

later) risk calculation. To get rid of the warning you must upgrade the

old fragilitymodels files to Natural hazards’ Risk Markup Language v0.5. You can use the command

upgrade_nrml with oq to do this as follows:

user@ubuntu:~$ oq upgrade_nrml <directory-name>

The above command will upgrade all of your old Fragility Model files to

Natural hazards’ Risk Markup Language v0.5. The original files will be kept, but with a .bak

extension appended. Notice that you will need to set the

lossCategory attribute to its correct value manually. This is easy

to do, since if you try to run a computation you will get a clear error

message telling the expected value for the lossCategory for each

file.

Several methodologies to derive fragilityfunctions are currently being evaluated by GEM Foundation and have been included as part of the Risk Modeller’s Toolkit, the code for which can be found on a public repository at GitHub at the following address: gemsciencetools/rmtk.

A web-based tool to build a Fragility Model in the Natural hazards’ Risk Markup Language schema are also under development, and can be found at the OpenQuake platform at the following address: https://platform.openquake.org/ipt/.

3.2.3. Consequence Models#